A/B testing helps startups make quick, clear decisions. It lets you test and compare parts of your product. This way, you see what changes boost your business. With careful testing, you turn guesses into facts and make smarter choices.

It's key to growing your startup smartly. Use it to tweak your message, welcome new users better, set the right prices, and get more people using your service. Every test teaches you something new. And those lessons can help you grow even faster.

Done well, testing leads to faster iteration, lower customer acquisition costs, more revenue per customer, and a clearer sense of what to focus on next. By checking your ideas first, you avoid wasting time and money. You make decisions based on what really works.

There are great tools for testing. Optimizely, VWO, and AB Tasty run A/B tests. LaunchDarkly lets you test features behind flags. Amplitude and Mixpanel track what users do. Heap and PostHog make tracking events easier. BigQuery and Snowflake help you look at all your data together.

Plan your tests well. Keep a record of your testing rules, what you think will happen, and how to tell if you're right. Keep track of your tests to learn more over time. This way, your ability to test and learn grows stronger.

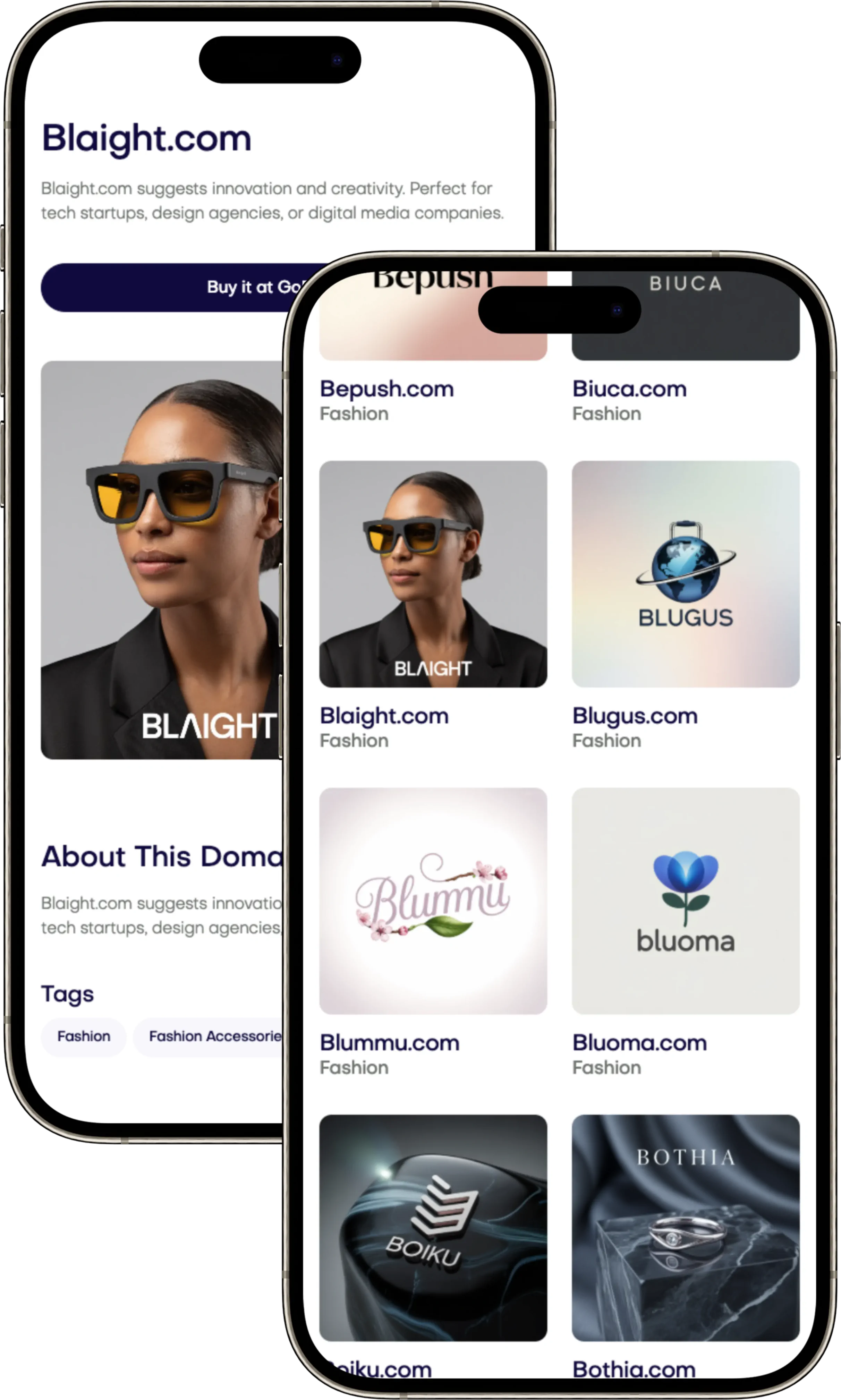

As you test and learn, build a strong brand, too. You can find great names for your brand at Brandtune.com.

Why A/B Testing Matters for Early-Stage Growth

Your business can move quicker than the big guys. A/B testing turns that speed into an advantage. You learn from what people actually do, not from guesses. This keeps growth grounded in evidence and keeps your team aligned with real customer behavior.

Try things out to check your big guesses: like if people like your offer, how they start, and what they think of your prices. Look at what happens, not just what people say. This helps avoid doing the same work twice and focuses your resources on what really works.

Put your resources where the data says. Choose options that make more people start and keep using your service. This helps spend money wisely, making it cheaper to find new customers and keeping them longer. When it costs less to get customers and they stick around, everything works better.

Set a regular schedule, like every week or two. Every attempt, whether it works or not, teaches you something. You'll know your customers better and improve your messages, design, and prices. That means more people stay and fewer leave, as what you offer fits what they want.

As time goes on, making choices based on data really adds up. Teams that keep testing and learning do better in getting and keeping users, and making more money efficiently. This ongoing process of learning and improving turns small discoveries into big advantages as you grow.

Core Principles of Experimental Design for Product Teams

Your product team can move faster when every change follows certain steps. Start small, express your goal clearly, and let data show the way. Focus on: one decision, one clear result, and one action to take.

Defining clear hypotheses and success criteria

Write a test hypothesis like this: If we change X for audience Y, then Z will improve because of a specific reason. Set targets up front, like a +8% rise in signups, and write down your decision rules before starting. This keeps you from moving the goalposts later and keeps choices straightforward.
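One way to hold yourself to this is to write the plan down as an immutable record before launch. The sketch below is only an illustration: the `ExperimentPlan` class, its field names, and the example values are all hypothetical, not part of any tool mentioned here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class ExperimentPlan:
    """Hypothetical pre-launch record of a hypothesis and its decision rule."""
    change: str               # X: what we change
    audience: str             # Y: who sees it
    metric: str               # Z: what should improve
    reason: str               # why we expect the improvement
    min_relative_lift: float  # target set before launch, e.g. 0.08 for +8%
    decision_rule: str        # what we will do with the result

    def hypothesis(self) -> str:
        # Render the "If we change X for Y, then Z improves because..." sentence.
        return (
            f"If we {self.change} for {self.audience}, then {self.metric} "
            f"will improve by at least {self.min_relative_lift:.0%} "
            f"because {self.reason}."
        )


# Illustrative values only.
plan = ExperimentPlan(
    change="shorten the signup form to two fields",
    audience="new visitors on mobile",
    metric="signup rate",
    reason="a shorter form reduces friction",
    min_relative_lift=0.08,
    decision_rule="Ship if the target is met and no guardrail metric worsens.",
)
print(plan.hypothesis())
```

Because the record is frozen, changing the target after seeing results means creating a new, visibly different plan rather than quietly editing the old one.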

Keep your test to one main change. Only bundle changes if they really belong together. Pick your audience and target metric carefully. Estimate the smallest change you could detect given your baseline rate, and figure out how many users you need with tools like Evan Miller’s calculators or Optimizely’s sample size calculator. This planning helps you draw strong conclusions.
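To get a feel for the numbers before opening a calculator, here is a minimal sketch of a sample size estimate for comparing two conversion rates. It assumes a two-sided test and a simple normal approximation, so its output will differ somewhat from the calculators named above. The function name, the defaults (5% significance, 80% power), and the 10% baseline are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group to detect the given relative lift.

    Uses the normal approximation for a two-sided test on two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Assumed example: 10% baseline signup rate, hoping for a +8% relative lift.
print(sample_size_per_variant(0.10, 0.08))
```

Small lifts on low baseline rates need a lot of traffic, which is why a startup with few visitors often has to test bolder changes or choose a metric higher up the funnel.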

Choosing primary and guardrail metrics

Pick one key metric that shows how you're doing, like how many new users start using your product or buy something. Choose a metric that shows clear changes and isn't noisy. Connect smaller goals, like click rates or how much people scroll, to big aims like keeping users and making more money. This helps you avoid changing your focus too much and makes your goals clearer.

Watch guardrail metrics so a win on one number doesn't cause problems elsewhere: bounce rate, page load time, errors, support tickets, and churn or unsubscribes. If a change makes these worse, stop and think it over. Aim for balance to keep users' trust while you learn.

Avoiding common experimentation biases

Set stopping rules before launch so you don't peek at results too early or cherry-pick what to report after seeing them. If you need to check progress, use sequential tests or Bayesian methods. Run the test long enough to even out novelty effects and seasonal changes, watching for unexpected spikes or dips. If the split between groups drifts from the allocation you planned (a sample ratio mismatch), investigate before trusting the results.
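A group-size check can be automated with a chi-square goodness-of-fit test. The sketch below assumes two groups and uses only the standard library. The function name and the example counts are made up for illustration, and the threshold you alert on is a team choice, not a fixed rule.

```python
import math


def srm_p_value(control_users, variant_users, expected_control_share=0.5):
    """P-value for 'observed group sizes match the planned split' (two groups)."""
    total = control_users + variant_users
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = (
        (control_users - expected_control) ** 2 / expected_control
        + (variant_users - expected_variant) ** 2 / expected_variant
    )
    # With one degree of freedom, the chi-square tail probability
    # equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Made-up counts: a planned 50/50 split that came out 5,000 vs 5,400.
p = srm_p_value(5000, 5400)
if p < 0.001:  # a deliberately strict threshold, chosen here as an assumption
    print("Possible sample ratio mismatch: check assignment and tracking.")
```

A very small p-value does not say which group is wrong. It only says the split is unlikely to be chance, so the usual next step is to look for redirects, bot filtering, or logging that treats the groups differently.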

Keep your test clean by randomizing assignment and using stable IDs so each person only sees one version. If you’re testing lots of versions, manage the risk of chance findings by planning your comparisons ahead, applying multiple-comparison corrections, or controlling the false discovery rate. These steps help fight bias and keep your results trustworthy.
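Stable assignment is commonly done by hashing the user ID together with an experiment key, so the same person always lands in the same group without storing anything. This is a minimal sketch of that idea, not how any particular vendor implements it. The function name and the experiment key are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment_key, variants=("control", "treatment")):
    """Deterministically map a stable user ID to one variant."""
    # Including the experiment key means a user's bucket in one experiment
    # does not determine their bucket in another.
    raw = f"{experiment_key}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(raw).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# The same inputs always give the same answer, across servers and sessions.
first = assign_variant("user-123", "pricing-page-headline")
second = assign_variant("user-123", "pricing-page-headline")
print(first, first == second)
```

This only keeps exposure consistent if the ID itself is stable. An anonymous cookie that resets on every visit will still let one person see both versions.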

Startup A/B Testing

Experiments that match your strategy can help you grow fast. Treat startup A/B testing as an efficient engine with short cycles, clear goals, and smart test planning. Quick wins help you, and quick losses teach you.

Selecting impactful test ideas from a lean backlog

Get ideas from real user behavior. Use user interviews, Hotjar or FullStory session replays, and heatmaps. Look at where users drop off, what they complain about to support, and what they say in exit surveys. Add Jobs-to-Be-Done insights for deeper understanding.

Focus tests on areas that matter a lot. Look at how clear your offer is, how you talk about prices, and first steps for users. Think about when you ask for money, how you write emails and notifications, and guiding users in your app. Make sure everything in the backlog is clear and linked to what you want to measure.

Prioritization frameworks: ICE, PIE, and beyond

Use the ICE score (Impact, Confidence, Ease) and PIE (Potential, Importance, Ease) to choose what to do first. Check scores against past results and the real work needed so you don't just pick the exciting ideas.

Add RICE, considering Reach, Impact, Confidence, and Effort. Check the list every week to stay on track and remove old ideas. This makes a list that balances the size of your audience and the cost of doing something.
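Scoring a backlog is simple arithmetic, so it is easy to keep in a small script or spreadsheet. The sketch below uses the common RICE formula, reach times impact times confidence divided by effort. The `Idea` class, the units, and every number in the example backlog are made-up assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # users affected per month (assumed unit)
    impact: float      # expected effect per user, e.g. 0.5 low to 3 high
    confidence: float  # 0.0 to 1.0: how sure we are about reach and impact
    effort: float      # person-weeks to build and run the test

    @property
    def rice(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort


def rank(ideas):
    """Return ideas sorted from highest to lowest RICE score."""
    return sorted(ideas, key=lambda idea: idea.rice, reverse=True)


# Hypothetical backlog with illustrative numbers.
backlog = [
    Idea("Onboarding checklist", reach=1500, impact=3, confidence=0.5, effort=4),
    Idea("Pricing page headline", reach=4000, impact=2, confidence=0.8, effort=1),
    Idea("Welcome email subject", reach=8000, impact=0.5, confidence=0.9, effort=0.5),
]
for idea in rank(backlog):
    print(f"{idea.name}: {idea.rice:.1f}")
```

The scores are only as good as the inputs, so it helps to revisit confidence values after each test and see whether past high scorers actually delivered.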

Designing rapid, low-cost experiments

Try out quick tests with no-code options in Webflow or Unbounce, small text changes, and different email subjects. Use different headlines and calls to action when you have enough visitors. Otherwise, keep changes small.

Start with areas that many people see. Try big changes to see clear results. Use control groups when adding new features to know their true effect, avoiding confusion from time-specific events or other efforts.

Interpreting results with practical significance

Look beyond just statistics to see real-world effects: how changes touch sales, getting started, and keeping users. Balance what you gain against the cost and any new problems introduced.
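One way to weigh gain against cost is to compare a confidence interval for the lift with the smallest lift that would justify shipping. The sketch below assumes conversion-rate data and a normal-approximation interval. The function names, the three decision labels, and the example counts are illustrative assumptions, and the threshold is in absolute terms (0.005 means half a percentage point).

```python
import math
from statistics import NormalDist


def lift_interval(control_conv, control_n, variant_conv, variant_n, confidence=0.95):
    """Confidence interval for the absolute difference in conversion rate."""
    p_c = control_conv / control_n
    p_v = variant_conv / variant_n
    diff = p_v - p_c
    se = math.sqrt(p_c * (1 - p_c) / control_n + p_v * (1 - p_v) / variant_n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff - z * se, diff + z * se


def decide(low, high, min_worthwhile_lift):
    """Compare the interval with the smallest lift worth the cost of shipping."""
    if low >= min_worthwhile_lift:
        return "ship"          # even the pessimistic estimate clears the bar
    if high < min_worthwhile_lift:
        return "drop"          # even the optimistic estimate falls short
    return "inconclusive"      # the data cannot yet separate the two


# Made-up counts: 1,000 of 10,000 control users convert vs 1,150 of 10,000.
low, high = lift_interval(1000, 10000, 1150, 10000)
print(decide(low, high, min_worthwhile_lift=0.005))
```

A result can be statistically significant and still land in "inconclusive" or "drop" here, which is the point: the bar is set by what the change costs to build and maintain, not by the p-value alone.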

Make sure gains last by running follow-up tests or rolling changes out gradually. Record and share what you learn, not just whether a test won or lost. This makes your future tests better and strengthens your startup A/B testing efforts.

Building a Reliable Experimentation Pipeline

Your business needs an experimentation system that is both fast and under control. It's important to support testing on both the server side and the client side. This lets you check ideas on both web and mobile platforms. Use feature flagging tools like LaunchDarkly, Split, or Optimizely Rollouts. These tools help control who sees what, give you kill switches, and keep deploy separate from release.

Make sure your data quality is top-notch from the beginning. Have standard ways of recording events, using clear names, and keeping track of

